AI is now being integrated into many industries, and video conferencing equipment is no exception. With AI chipsets arriving in cameras and audio devices, how will AI affect video recording and our experience of virtual collaboration?

AVer integrates advanced AI features into its video conferencing, Pro AV, and medical-grade solutions for hybrid workspaces, HyFlex learning spaces, large-venue events and exhibitions, professional broadcasting, and hospital wards. AI-powered technology sharpens video and audio quality, automates participant tracking, and improves meeting engagement and efficiency. In medical settings, it can also monitor patient safety.

With that in mind, here’s a compendium of AVer’s AI features for tracking and framing applications. Let’s take a closer look at what they do.

AI-Powered Camera Features

Auto Framing

Sophisticated AI algorithms are leading the charge by enhancing functions like Auto Framing. These algorithms enable cameras to capture subjects automatically and accurately, even in challenging conditions. That means no more blurry images in video conferences, and everyone stays clearly visible within the frame, even when several people are present.

AVer’s SmartFrame technology automatically adjusts the camera’s field of view (FOV) to fit all participants in a meeting. This feature simplifies the setup process by eliminating the need for manual adjustments, allowing users to start their meetings quickly and efficiently.
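To make the idea concrete, here is a minimal sketch of how a fit-everyone crop could be computed, assuming a person detector has already returned one bounding box per participant. The `Box` type, the `frame_participants` function, and the `margin` parameter are hypothetical names chosen for illustration; they are not taken from AVer's SmartFrame implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in pixel coordinates (hypothetical type)."""
    left: float
    top: float
    right: float
    bottom: float


def frame_participants(people, frame_w, frame_h, margin=0.1):
    """Return the crop that contains every detected person plus a margin.

    people: list of Box detections, one per participant.
    margin: fraction of the combined box size added on each side
            (an illustrative assumption, not a documented setting).
    """
    if not people:
        # Nobody detected: fall back to the full field of view.
        return Box(0, 0, frame_w, frame_h)

    # Smallest rectangle that covers all person boxes.
    left = min(p.left for p in people)
    top = min(p.top for p in people)
    right = max(p.right for p in people)
    bottom = max(p.bottom for p in people)

    # Pad the rectangle, then clamp it to the camera frame.
    pad_x = (right - left) * margin
    pad_y = (bottom - top) * margin
    return Box(
        max(0, left - pad_x),
        max(0, top - pad_y),
        min(frame_w, right + pad_x),
        min(frame_h, bottom + pad_y),
    )
```

A real camera would also smooth the crop over time and respect the output aspect ratio, which this sketch leaves out.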

AVer also offers Smart Composition and Smart Gallery framing technologies, which cater to different attendee headcounts, meeting room sizes, and hybrid conferencing needs.

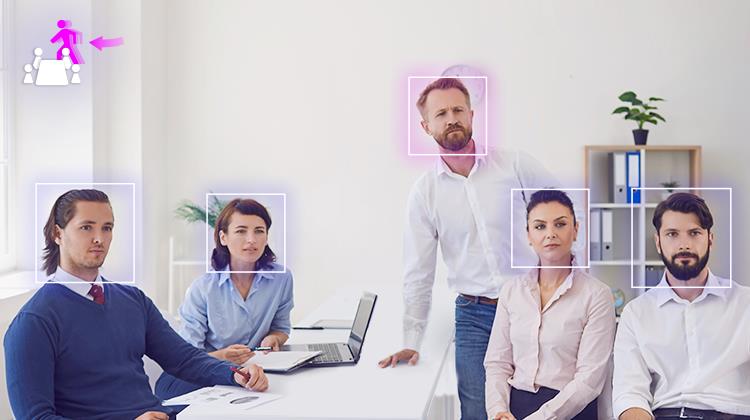

Auto Tracking

AI Auto Tracking uses AVer’s own patented algorithm and human detection technologies to automatically detect, follow, and focus on speakers so that they remain centered in the frame. With AI, smooth and uninterrupted tracking is possible during lectures, video conferences, and live events, even in complex scenarios with dynamic movements.

AVer’s range of Auto Tracking cameras, including the TR615 and TR535N, comes with a combination of tracking capabilities, such as Presenter, Zone, Hybrid, and Segment Modes. These modes use human detection processing to follow presenters accurately, supporting applications from education to live events and corporate video conferences.

- Presenter Mode keeps the speaker in sharp focus, even as they move around, making them the central figure in the scene.

- Zone Mode is tailored for static content, capturing fixed subjects with precision and clarity.

- Hybrid Mode blends the strengths of Presenter and Zone Modes, adapting effortlessly to dynamic environments and unpredictable movements.

- Segment Mode offers a customizable approach to tracking and allows users to designate specific points of interest within a wider area, which can reduce the need for multiple cameras and lower production costs.

AVer’s cameras use advanced Human Detection AI, which can tell the intended subject apart from other movement in the scene, such as rustling curtains or passersby. This technology, trained to identify human shapes and characteristics, reduces unnecessary focus shifts and minimizes distractions, keeping the camera on the intended subject.

Going a step further, AVer’s medical-grade cameras take patient safety to the next level with AI-powered fall detection. The AVer MD120UI, for example, features an integrated Edge-AI computer vision model that detects abnormal events within patient wards.

If a patient falls within the camera’s view, the system automatically alerts nursing station staff, ensuring timely intervention. Notably, this intelligent feature is integrated into the camera itself, streamlining patient monitoring and eliminating the need for additional sensor pads or wearable devices.

Dynamic Detection

AI has revolutionized conferencing cameras by letting them respond to movement in real time. Cameras swiftly recognize objects or people as they appear in the frame and optimize settings on the spot.

One example is Dynamic Detection. In cameras like the AVer CAM570, a dual-lens system provides a comprehensive view of the room while accurately tracking movement in real time, so the camera adapts seamlessly to visual changes during a meeting.

When a new person enters the room, the secondary AI-powered lens alerts the primary pan-tilt-zoom (PTZ) camera to adjust its focus, instantly reframing the scene to include all participants and facilitating uninterrupted collaboration.

AI Audio Processing

We’ve all been there — trying to focus on a conference call while being distracted by surrounding noises. AI-powered speakerphones, such as the ceiling-mounted AVer FONE700, use noise suppression techniques to deliver crystal-clear audio for all participants, whether they’re in the room or joining remotely.

Here are the features that make conference calls sound better than ever:

Acoustic Echo Cancellation

AEC detects and removes echo, the delayed copy of a person’s voice that comes out of the loudspeaker and is picked up again by the microphone, allowing for clearer conversations without the distraction of echoes.
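Echo cancellers are commonly built around an adaptive filter that learns how the loudspeaker signal arrives at the microphone and then subtracts that estimate. The sketch below uses the textbook normalized least-mean-squares (NLMS) filter purely as an illustration of the principle. It is not AVer's implementation, and the function name and parameters (`taps`, `mu`, `eps`) are assumptions.

```python
def nlms_echo_cancel(far_end, mic, taps=8, mu=0.5, eps=1e-8):
    """Subtract an adaptive estimate of the loudspeaker echo from the mic signal.

    far_end: samples sent to the loudspeaker (the reference signal).
    mic:     samples captured by the microphone (echo plus any local speech).
    Returns the echo-reduced signal, one output sample per input sample.
    """
    weights = [0.0] * taps   # current estimate of the echo path
    history = [0.0] * taps   # most recent far-end samples, newest first
    out = []
    for x, d in zip(far_end, mic):
        history = [x] + history[:-1]
        echo_estimate = sum(w * h for w, h in zip(weights, history))
        error = d - echo_estimate          # what is left after removing the echo
        power = sum(h * h for h in history) + eps
        step = mu * error / power          # step size normalized by signal power
        weights = [w + step * h for w, h in zip(weights, history)]
        out.append(error)
    return out
```

In a real product the filter would be much longer and would pause adaptation while people on both ends talk at once, which is where double-talk detection comes in.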

Advanced Noise Reduction

Algorithms analyze the noise characteristics and apply filters and adjustments to reduce noise without significantly affecting the original content.

Automatic Gain Control

AGC automatically adjusts the signal’s gain to maintain a consistent output level, compensating for fluctuations in input signal strength.
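As a rough illustration, a simple gain controller can measure the loudness of each short audio frame and nudge the gain toward a target level. The sketch below is a generic example under stated assumptions: the function name, `target_rms`, `max_gain`, and `smoothing` are hypothetical and do not describe how any AVer device is tuned.

```python
import math


def automatic_gain_control(frames, target_rms=0.1, max_gain=10.0, smoothing=0.9):
    """Scale each audio frame toward a target RMS level.

    frames: list of frames, each a list of float samples in [-1, 1].
    The gain is smoothed between frames so the level does not jump.
    """
    gain = 1.0
    out = []
    for frame in frames:
        rms = math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0
        if rms > 1e-6:
            # Gain that would bring this frame to the target, capped at max_gain.
            desired = min(max_gain, target_rms / rms)
            gain = smoothing * gain + (1.0 - smoothing) * desired
        # Silent frames keep the previous gain, so the gain does not drift.
        out.append([s * gain for s in frame])
    return out
```

With these settings, a quiet talker and a loud talker both settle at roughly the same output level after a few dozen frames.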

Double-Talk Detection

Uses a combination of audio signal processing algorithms and machine learning techniques to detect double-talk, which occurs when two or more people speak at the same time, then automatically adjusts the audio signal to prevent echo and feedback.

Reverberation Reduction

Reverberation refers to the persistence of sound after the original sound has stopped. Reducing it helps eliminate the echoey or hollow quality it causes, resulting in a clearer and more intelligible audio signal.

Audio Fence

Uses advanced audio signal processing algorithms to create a virtual “fence” around the voices of the participants in a conference call and separates the voices from the background noise and other unwanted sounds.

AI Audio Tracking

AI audio tracking and video auto tracking work together to do the heavy lifting of following a conversation. AI human voice recognition takes the lead, directing the camera to automatically track and smoothly switch to whoever is actively speaking. That way, remote participants stay engaged and feel included in the conversation.

Voice Tracking Mode

Refers to the ability of a microphone system to follow and focus on the voice of the person speaking. In coordination with a camera, this means that when more people join the conversation, the camera switches to auto-framing mode and captures everyone in the meeting. This reduces camera movement and provides smoother footage for remote participants.

Preset Framing

In a presentation scenario, you can designate a specific area for the camera to focus on. When the camera detects a person in that preset area, it frames the preset zone, which is useful for hybrid presentations and demonstrations. If AI detects a human voice outside the preset zone, the camera automatically frames that speaker so remote participants can see who is talking. If no human voice is detected for five minutes, the camera automatically returns to the preset area.
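The switching behavior described above can be pictured as a tiny state machine. The sketch below is only an illustration: the `PresetFraming` class, the `voice_zone` values, and the choice to go straight back to the preset when a voice is heard inside it are assumptions, while the five-minute timeout comes from the description above.

```python
from dataclasses import dataclass
from typing import Optional

RETURN_TIMEOUT_S = 5 * 60  # five minutes without a voice, per the description above


@dataclass
class PresetFraming:
    """Hypothetical state machine for the preset-framing behavior."""
    target: str = "preset"                  # "preset" or "speaker"
    last_voice_time: Optional[float] = None

    def update(self, now: float, voice_zone: Optional[str]) -> str:
        """Advance one step and return what the camera should frame.

        now:        current time in seconds.
        voice_zone: None (no voice), "preset", or "outside".
        """
        if voice_zone is not None:
            # Any voice restarts the silence timer.
            self.last_voice_time = now
            self.target = "speaker" if voice_zone == "outside" else "preset"
        elif (
            self.target == "speaker"
            and self.last_voice_time is not None
            and now - self.last_voice_time >= RETURN_TIMEOUT_S
        ):
            # Nobody has spoken for five minutes: return to the preset area.
            self.target = "preset"
        return self.target
```

For example, a voice outside the preset at second 10 switches the camera to the speaker, and with no further voices it returns to the preset at second 310.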

Dynamic Beamforming

Adjusts the directional focus of the microphones in real time to pick up resonant voices. AVer’s VB350 video bar, for example, can pick up and reproduce voices from up to 10 meters away. This enhances audio quality and reduces interference, making it more effective in environments with multiple sound sources.
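For readers curious about the underlying principle, the classic textbook form of beamforming is delay-and-sum: each microphone's signal is shifted so that sound from the chosen direction lines up, then the channels are averaged. The sketch below assumes a straight-line microphone array, a distant source, and whole-sample delays. The function names are hypothetical, and this is not a description of the VB350's actual processing.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value in room-temperature air


def steering_delays(mic_positions_m, angle_deg, sample_rate):
    """Per-microphone delays (in samples) that align sound from angle_deg.

    mic_positions_m: microphone positions along a straight line, in meters.
    angle_deg: direction of arrival measured from straight ahead (0 degrees).
    """
    sin_a = math.sin(math.radians(angle_deg))
    # Arrival time of the wavefront at each mic relative to position 0.
    arrivals = [-p * sin_a / SPEED_OF_SOUND for p in mic_positions_m]
    latest = max(arrivals)
    # Delay the early channels so every channel lines up with the latest one.
    return [round((latest - t) * sample_rate) for t in arrivals]


def delay_and_sum(channels, delays):
    """Average the channels after shifting each by its integer delay."""
    n = len(channels[0])
    out = []
    for i in range(n):
        total = 0.0
        for ch, d in zip(channels, delays):
            j = i - d
            if 0 <= j < n:
                total += ch[j]
        out.append(total / len(channels))
    return out
```

Sound from the steered direction adds up at full strength, while sound from other directions arrives misaligned and is partly averaged away. A dynamic beamformer would recompute the delays as the talker moves.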

AI Video Conferencing Moving Forward

AI integration in cameras and audio equipment has transformed and enriched how people interact virtually, and this is only the beginning. From here on, more immersive and dynamic features will take virtual collaboration up a level.

The recent introduction of Google Beam has brought a glimpse of this concept to life. It uses AI, 3D imaging, and light field rendering to offer a highly immersive, true-to-life video experience that simulates the sensation of in-person interaction without the need for headsets, glasses, or wearable devices.

Another area for breakthroughs is augmented reality (AR) and extended reality (XR) technologies that use AI to seamlessly merge virtual elements with the real world. This holds tremendous promise for future meeting applications, such as integrating 3D design plans, overlaying relevant data and information onto real-world objects, or enhancing video conferencing with virtual whiteboards and interactive displays.